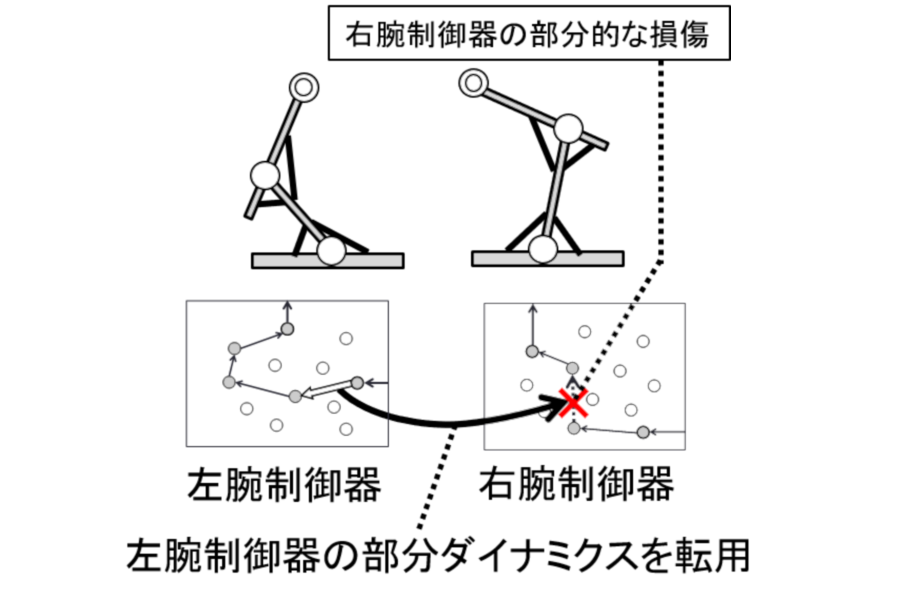

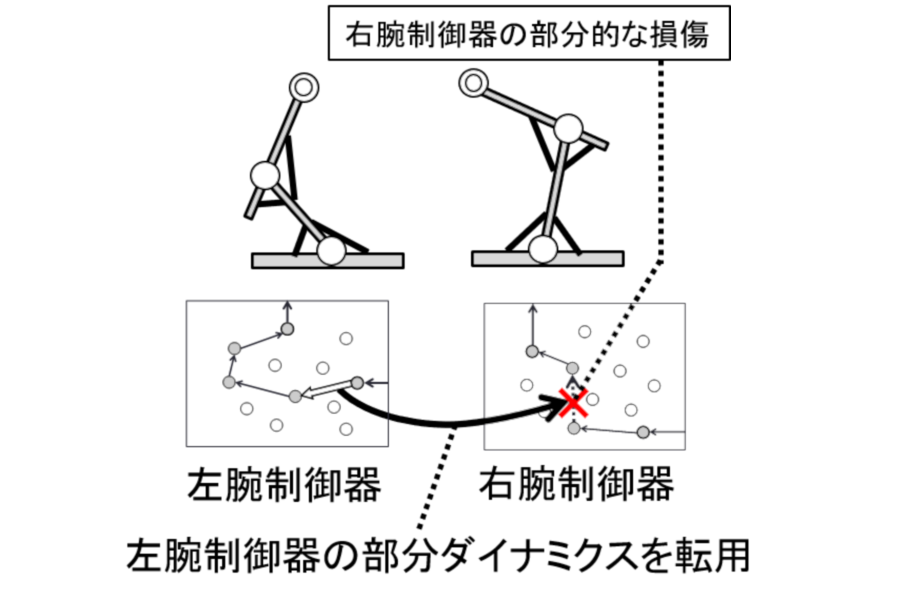

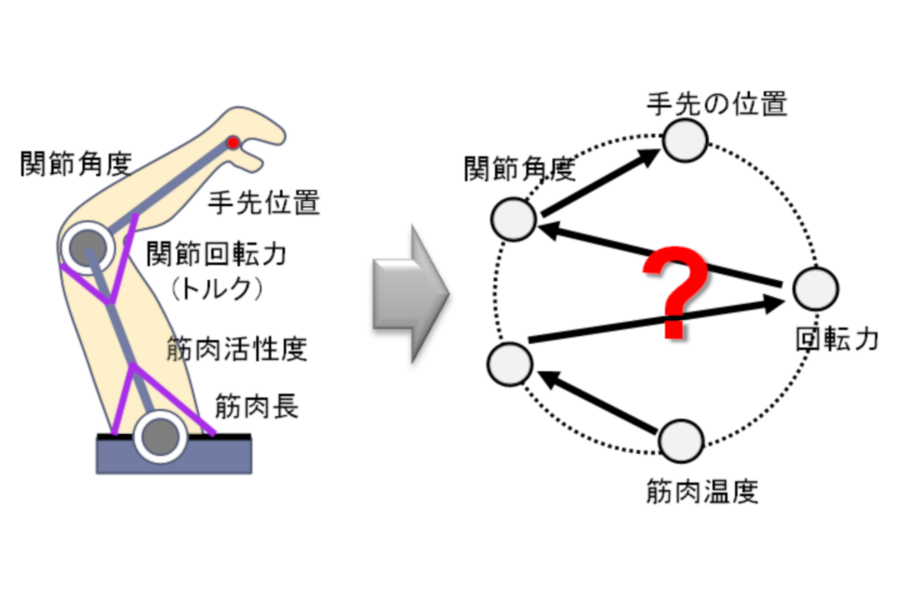

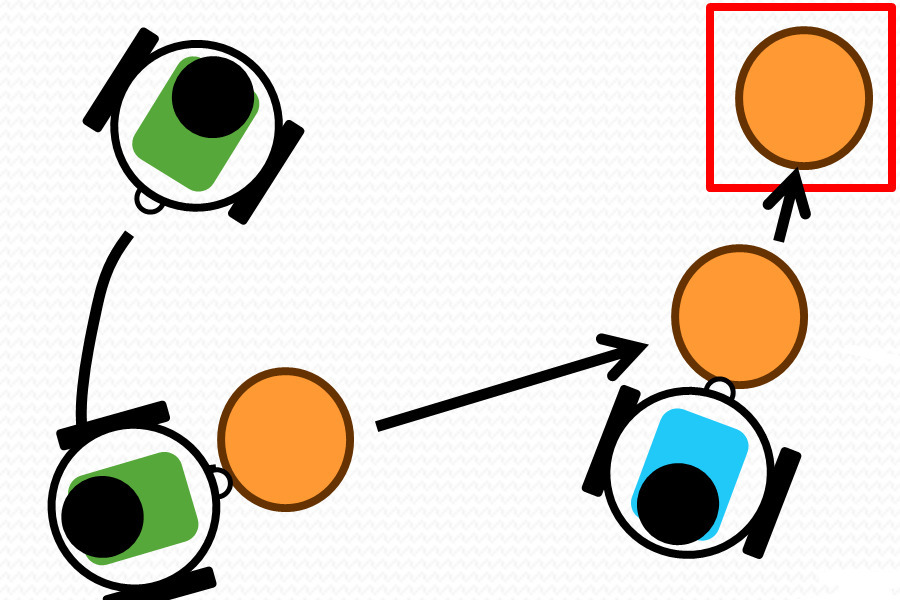

Development of a motor learning model that explains the partial reuse of previously acquired motor skills

We are developing a motor learning model that explains how parts of the dynamics of previously acquired controllers are reused. To do so, we introduce a mechanism called "transformation estimation between mappings" into a motor learning model that estimates dependencies between different senses.

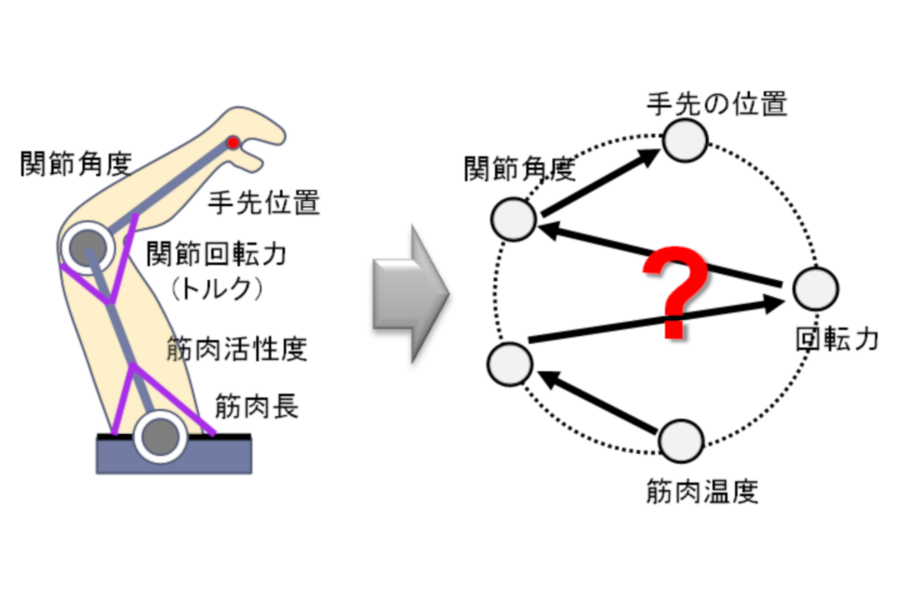

Automatic Generation of Control Rules for Musculoskeletal Arm Systems Based on the Estimation of Dependencies between Heterogeneous Sensors

We are developing a motion control method for systems capable of redundant, multimodal sensing. The method identifies dependencies between different sensors and estimates which sensor information can be used to achieve the desired control.

Navigation of mobile robots considering human interaction in high-density crowded environments

We are developing a navigation method for crowded environments such as train stations and stadiums, where people come and go at high density. The method takes interaction with people into account so that the robot does not interfere with the flow of people.

A method for identifying task execution status using natural language for robots that collaborate with humans

We are researching and developing methods that receive a person's verbal instructions about a task (e.g., that some action is failing), identify the execution status of the action, and respond by modifying the robot's behavior.

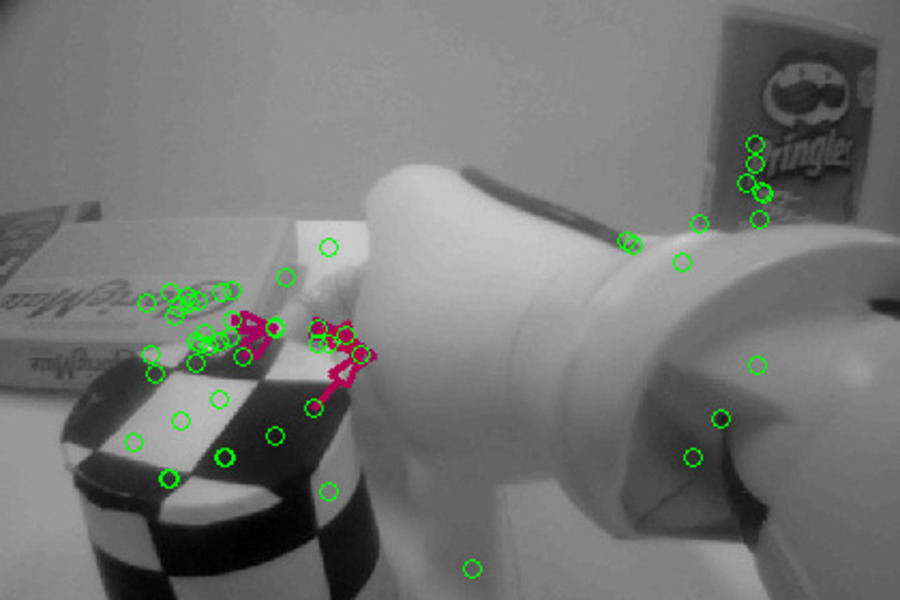

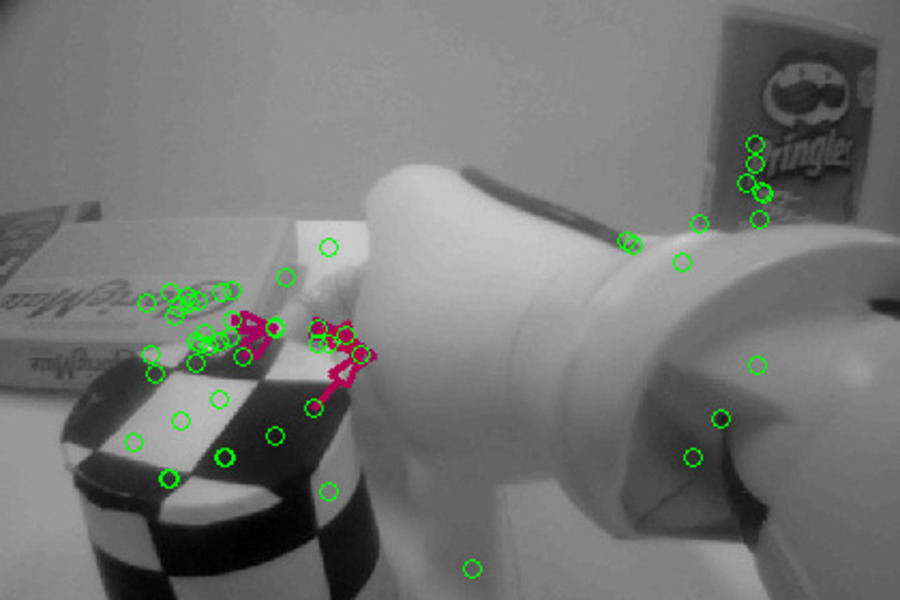

Autonomous environment recognition based on the interaction between the robot's body and the environment

We are researching a method that lets an autonomous robot recognize its surrounding environment using only the images it acquires, without prior information.

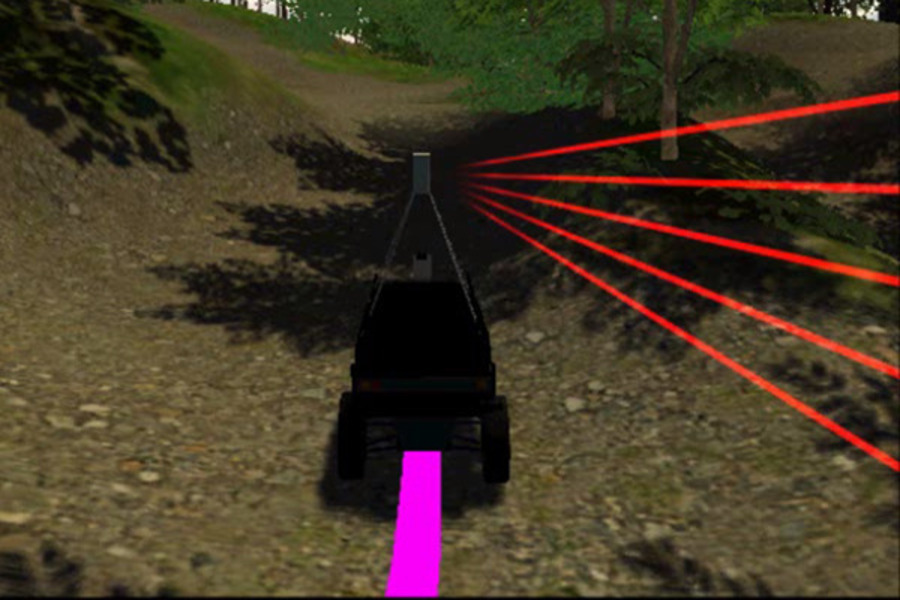

Environment recognition by a mobile robot using motion information from internal sensors

We are studying how a robot can recognize the road environment by estimating how driving over the road surface affects its motion, and how it can then avoid dangerous roads and reach its destination.

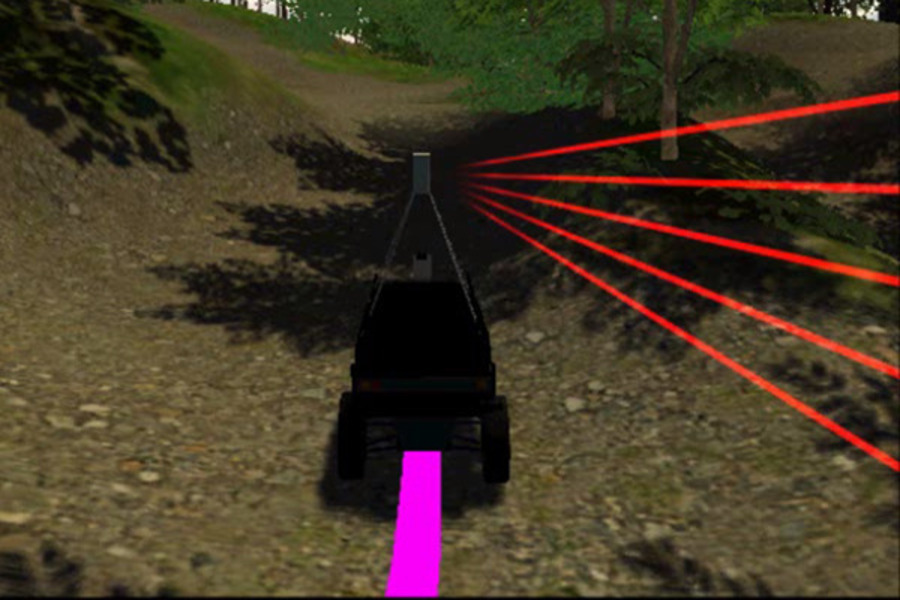

Optimal Control of Unmanned Autonomous Vehicles in Rough Terrain Environments

We are researching an autonomous driving method in which sensors (cameras, laser rangefinders, etc.) mounted on an unmanned vehicle are used to recognize and evaluate the drivability of the environment, and an optimal route to the destination is then generated.
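
As a rough illustration of route generation over a drivability estimate, the sketch below runs Dijkstra's algorithm on a small grid of traversal costs. It is a generic textbook technique, not the method developed in this research: the grid representation, the 4-connected neighborhood, the cost values, and the names `plan_route` and `terrain` are all assumptions made for the example.

```python
import heapq


def plan_route(cost, start, goal):
    """Dijkstra on a 4-connected grid.

    cost[r][c] is the (assumed) cost of entering cell (r, c);
    None marks a cell judged not drivable.
    Returns (path, total_cost), or (None, inf) if the goal is unreachable.
    """
    rows, cols = len(cost), len(cost[0])
    best = {start: 0.0}   # cheapest known cost to reach each cell
    parent = {}           # back-pointers used to rebuild the path
    queue = [(0.0, start)]
    while queue:
        dist, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if dist > best[cell]:
            continue      # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            step = cost[nr][nc]
            if step is None:
                continue  # skip non-drivable cells
            new_dist = dist + step
            if new_dist < best.get((nr, nc), float("inf")):
                best[(nr, nc)] = new_dist
                parent[(nr, nc)] = cell
                heapq.heappush(queue, (new_dist, (nr, nc)))
    if goal not in best:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    path.reverse()
    return path, best[goal]


# Hypothetical 3x3 terrain: 1 = easy ground, 9 = rough ground, None = blocked.
terrain = [
    [1, 1, 1],
    [1, 9, 1],
    [1, None, 1],
]
route, total = plan_route(terrain, (0, 0), (2, 2))
print(route, total)
```

In this toy terrain the planner goes around the rough cell rather than through it, because the detour has a lower summed cost. A real system would derive the costs from sensor data and would also have to respect the vehicle's motion constraints.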

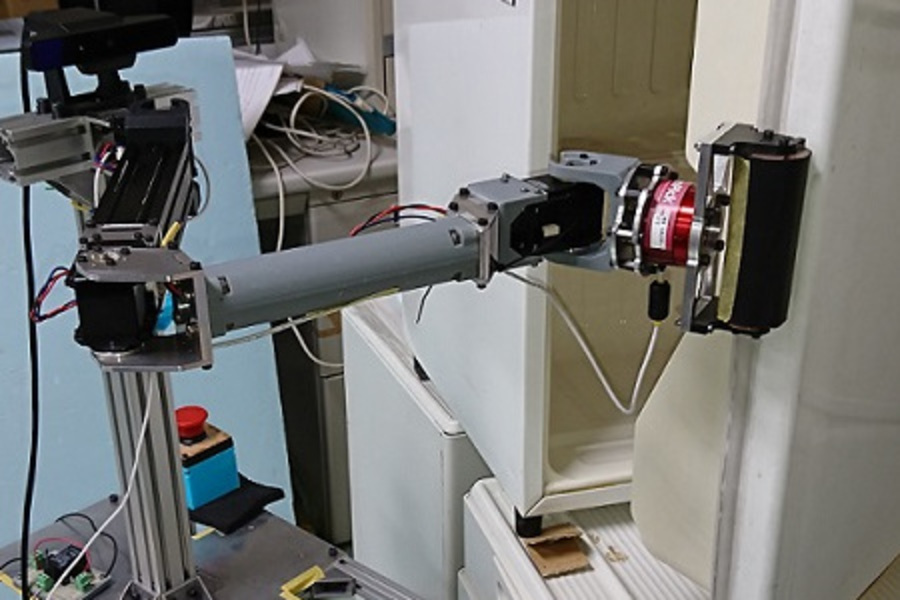

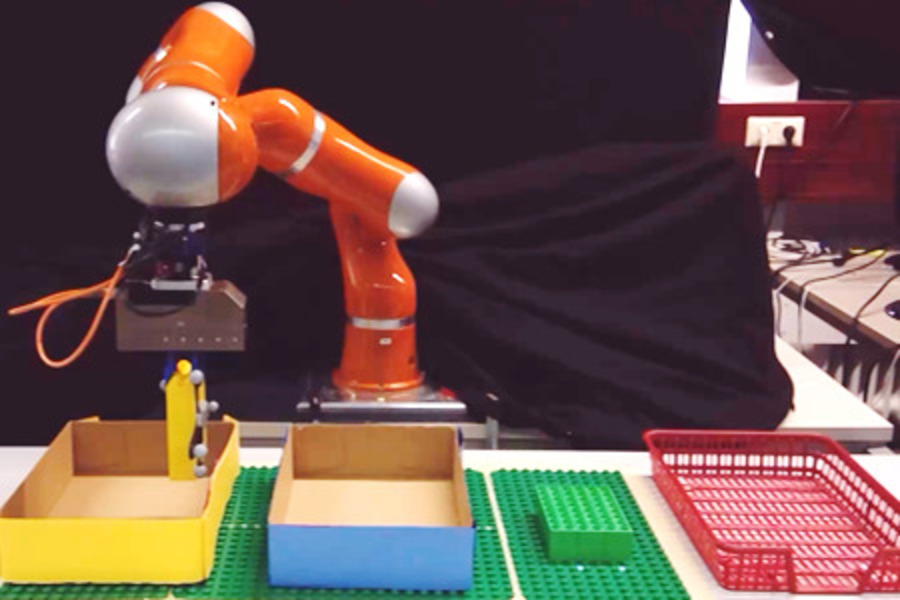

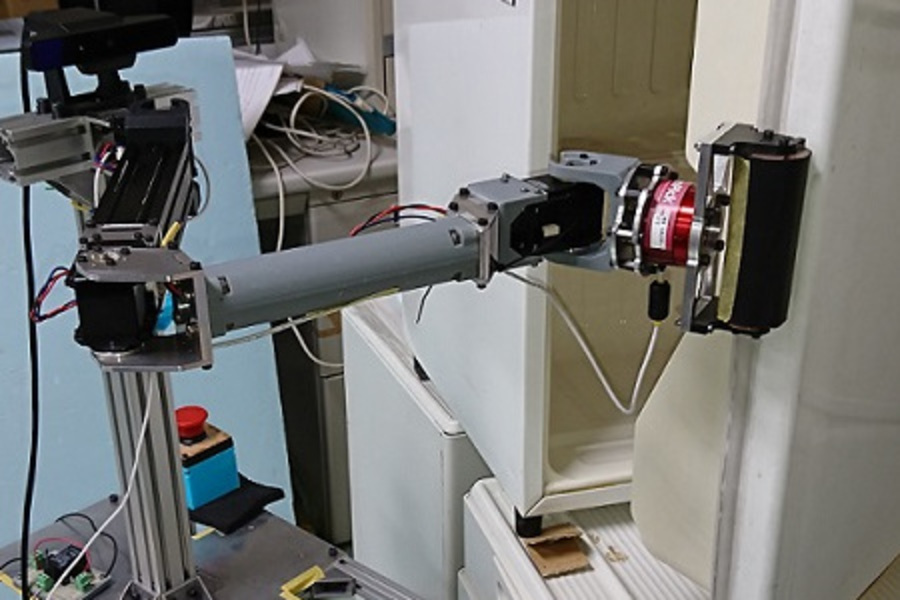

Control of environmental contact motion by a robot equipped with an arm

When a door is opened, its direction of movement is constrained by hinges or rails. Taking this constraint into account, we are researching a method that lets a robot perform the door-opening task autonomously using measurement data.
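
To make the hinge constraint concrete, the sketch below estimates a hinge position from measured handle positions by fitting a circle with an algebraic least-squares fit (the Kåsa method), then computes the direction in which the handle is free to move. This is only an illustration under simplifying assumptions: the door is hinged rather than on rails, the motion is treated as planar, and the measurements are noise-free. The function names `fit_hinge` and `opening_direction` and the sample data are hypothetical and are not taken from this research.

```python
import math


def solve_linear(a, b):
    """Solve a small dense system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("points are collinear or too few to define a circle")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        rest = sum(m[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (m[i][n] - rest) / m[i][i]
    return x


def fit_hinge(points):
    """Fit x^2 + y^2 + d*x + e*y + f = 0 to 2D points in the least-squares sense.

    Returns the circle centre (the assumed hinge position) and its radius.
    """
    rows = [(x, y, 1.0) for x, y in points]
    rhs = [-(x * x + y * y) for x, y in points]
    # Normal equations (A^T A) p = A^T b for the unknowns p = (d, e, f).
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    vec = [sum(r[i] * t for r, t in zip(rows, rhs)) for i in range(3)]
    d, e, f = solve_linear(normal, vec)
    cx, cy = -d / 2.0, -e / 2.0
    return (cx, cy), math.sqrt(cx * cx + cy * cy - f)


def opening_direction(center, handle):
    """Unit tangent at the handle: the only direction the hinge leaves free."""
    rx, ry = handle[0] - center[0], handle[1] - center[1]
    norm = math.hypot(rx, ry)
    return (-ry / norm, rx / norm)


# Synthetic handle positions on an arc around a hinge at (1.0, 2.0), radius 0.8 m.
angles = [0.0, 0.3, 0.6, 0.9, 1.2]
samples = [(1.0 + 0.8 * math.cos(a), 2.0 + 0.8 * math.sin(a)) for a in angles]
hinge, radius = fit_hinge(samples)
direction = opening_direction(hinge, samples[0])
print(hinge, radius, direction)
```

The tangent direction is where a controller could apply motion while staying compliant along the radius. With noisy measurements a more robust fit would likely be needed, and a door on rails would call for a line fit instead of a circle fit.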

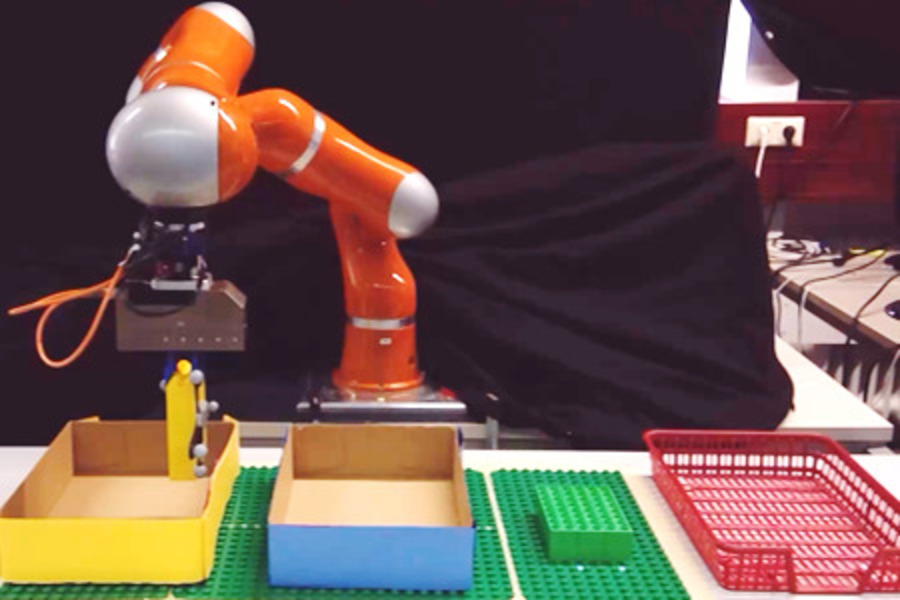

Object manipulation by an arm robot

As one approach to incorporating learning into object manipulation, we propose a manipulation method in which the contact model between the environment and the object is constructed flexibly from observed information.

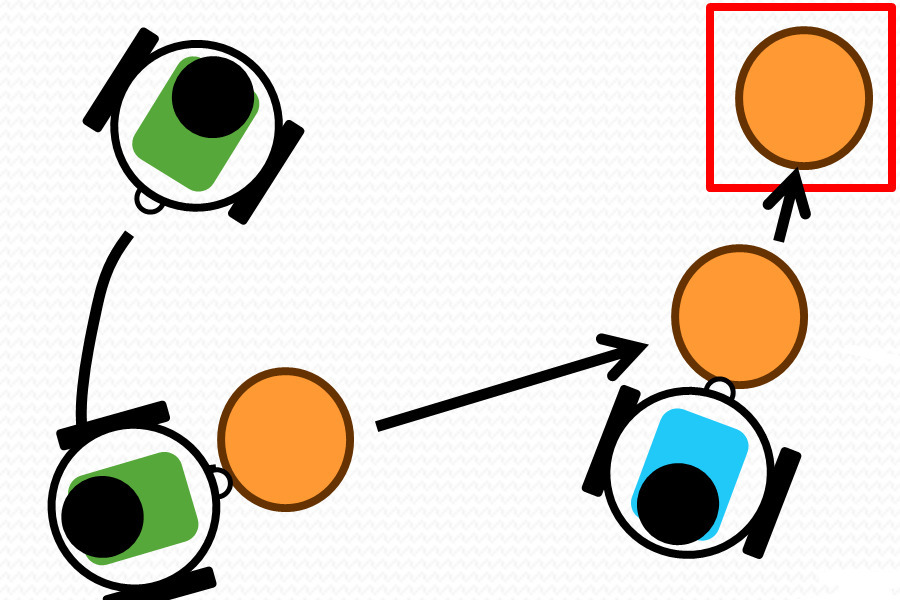

Motion planning for cooperative behavior of multiple mobile robots

To transport an object that cannot be held by an arm, we are planning motions in which several vehicle-type mobile robots push a single object together while sharing a path.

Generating Viewpoint-Change Images for Vehicle-mounted Camera Images

We are researching an image presentation method that helps a viewer recognize the traffic situation at a location. From images taken by in-vehicle cameras at two different locations, it automatically generates an image whose viewpoint is shifted to a location between the two.